The latest cognitiveSEO Talks episode, On Search and Traffic, brings to your attention Dan Petrovic, a digital marketer from Australia who runs a company called Dejan. Besides being a great guy with over 16 years of experience in digital marketing, Dan is deeply involved in understanding how people consume news content online.

The director of Dejan shared with us loads of tips on how to write content like a pro and how to make the most of Search Console, plus tons of great insights on Google and its ranking factors. We don’t want to spoil the pleasure of discovering these ideas for yourself, so hit play and enjoy the talk!

Dan’s knowledge and expertise will surely captivate you. We could keep on talking about how great he is personally and professionally, but we believe that the shortest distance between two people is a story, or an inspiring talk in our case. So grab a pen and a notebook and let yourself be inspired by this interview.

Razvan: Hello everyone! Welcome to cognitiveSEO Talks. Today we have a special guest from Australia, Dan Petrovic. He will tell us more about himself and introduce us to the world of content, content marketing, and SEO as he sees it in 2018. Dan, welcome, the microphone is all yours!

Dan: Thank you very much! It’s been a while since we’ve seen each other. It’s a pleasure to be with you again.

So, I’m Dan from Australia, and I run a company called Dejan Marketing; that’s all you need to know about me. If you want to find out more, you can google it. In the last two or three years I have been actively involved in understanding how people consume news content online, and during this time I’ve developed a whole range of new ideas on how content should be structured and produced in the context of user experience, usefulness, SEO strategy, data, and all sorts of wonderful things to do with digital marketing. So I’m sure we’ll cover a lot of interesting topics today.

Razvan: Sure we will.

What do you think is unique in your approach to content when you are applying it to your Dejan clients?

Dan: Absolutely nothing. But there’s a catch, obviously.

Razvan: So what’s the catch?

Dan: What is absolutely not unique about how I do content is that the method I apply has been used for many, many years. It’s called the “inverted pyramid”, and it’s been used in journalism for a long time. We can talk about it in detail, but essentially the TL;DR version (and that’s an important term to remember, “too long; didn’t read”, because that’s the generation we live in) is: start with the answer, with your big news, and deliver on the promise of your title. If you say in the title that you’re going to tell people something, your first sentence should deliver on that promise and explain the most salient points. Don’t delay, and don’t hide your goodies halfway down the article or at the bottom of it.

The second thing: once you’ve highlighted the main point of what you’re trying to say, your main idea, you go into the details that support it, whether that’s the main notion, the main discovery, or the piece of news. Everything that comes after that could be described as fluff. So: main point, secondary items, tertiary information. In essence, that’s how content should be written for the web; otherwise, people will simply not read it. It’s interesting because my research came two decades after Jakob Nielsen did his, and we reached the same conclusion. After surveying a thousand online readers, I arrived at the same percentage he did 20 years ago: 16%. Only 16% of readers actually read everything word for word; the rest just skip.

Razvan: I think that’s a big percentage. If you’re writing an article of about 5,000 words and 16% of readers read it completely, that’s a big, big achievement.

Dan: How sad is that? How sad is it that you have to say you’re happy with 16 percent of your audience actually reading the article that you put your …

Razvan: Yeah, that’s the reality of the web and the content and the scanning, and not reading everything.

Dan: The scanning is where it’s at, you know? People will scroll all the way to the bottom of the article, skip the entire body, and go to the comments, because in the comments you can often quickly get a summary of what the article is about, and people do that. Now, this doesn’t apply only to the written word; it applies to basically every piece of content that doesn’t get to the point and doesn’t deliver on its promise, including video. That’s why, in our little chat before this live recording, I said “Let’s not do a long intro, because people will skip it anyway. Let’s just get into the subject matter and talk about concrete things.” So what’s unique, other than following the inverted pyramid and being helpful in terms of the format people expect…

There are some other ancient tricks, really ancient ones, and one of them is to put one idea or thought into a paragraph; don’t bundle two ideas into the same paragraph. This goes back to what you mentioned about scanning. Imagine the user is reading an article and they hit a paragraph of text within it. That paragraph is trying to sell itself to the reader, “please read me”, and if the reader decides it starts with something they’re not interested in, they will automatically skip to the next paragraph, assuming that the next main idea is in the second paragraph, or the third, or the fourth, and so forth. So if you’re writing about two distinct ideas or subjects and you bundle them into a single paragraph, one of them will be lost, because the reader will jump straight to the next paragraph and the third idea. And that makes sense.

So when writing for the web, follow the inverted pyramid, split your ideas into distinct paragraphs, use bullet points, bolding, headings, and all the good principles of scannability and usability, and, in addition to that, make sure the article actually delivers on its promise. That’s quite important. Now the final thought, I guess, on how I’m different: I really feel like the world doesn’t need any more content; there’s too much of it already.

So there’s news, and news is always useful; evergreen content is useful too (you know, how to solve problems, this and that). But I think in our digital marketing landscape too many people are pumping out content just for the sake of Google rankings or traffic. They have a weekly schedule: “It’s Tuesday, so we must publish an article, because we publish articles on Tuesday.” When I’m writing or doing work for our clients, I publish when there’s something to write about, not because it’s Tuesday and that’s the schedule. If we all keep in mind that there’s too much content out there, then without a good reason to push content out, no content should be pushed out. It’s as simple as that. We could talk about this a bit later, but essentially, before I decide to create a piece of content for my clients, it has to be justified by reason and purpose: not only by newsworthiness but also by the data.

Razvan: How do you find the reason? Normally, if you have a client, you can’t say to him “We’re not going to publish anything because I don’t think there’s anything worth publishing”. Your purpose and scope is to find it, improve their rankings, bring more traffic, and do all this stuff.

How do you research all this stuff, and how do you find the exact topic that you’re going to write on?

Dan: Topics are really hard, and that’s where a little sprinkling of magic comes in, the part that just happens as creativity. But I don’t leave things to creativity or chance. A good idea for an article could strike me in the shower, while I’m sleeping or driving my car, or in a completely random situation.

Razvan: Yeah, I totally agree, but it’s not scalable.

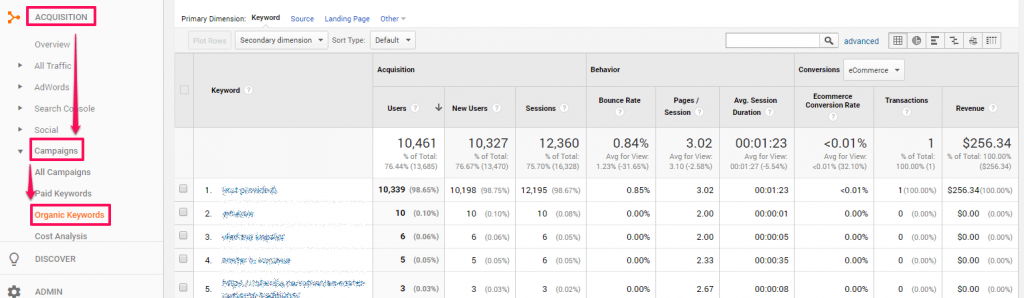

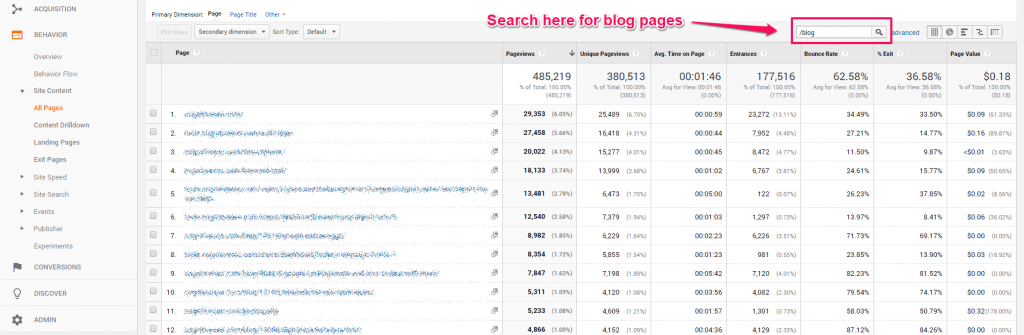

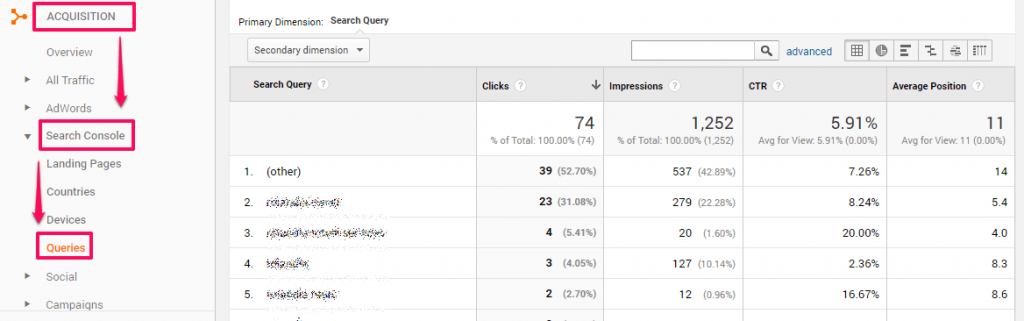

Dan: Yeah, they should invent waterproof notepads for the shower. There’s a whole subreddit called “Shower Thoughts”, because that’s your meditative space. I very rarely sit in my office and say “Alright, I’m going to come up with a great article idea for my client!” and then come up with great ideas within half an hour or an hour; I never do it, it just doesn’t happen like that. Creative thought takes time, and it’s a little bit mysterious to me. So I leave that aside: the ideas come to me when they come, and I just write them down. But I think the big exercise and responsibility of a digital marketer is to advise not only on opportunities but also to sort and prioritize different activities, and the same goes for content. Take an e-commerce site: these guys are retailing ten thousand different products in a hundred different categories, from electronics to skincare, and as a content marketing agency or an SEO you’re trying to decide “What do we write about next?”. Your decision could be influenced by something trending as a piece of news; you spot something hot on Reddit or in Google Trends (although Google Trends is pretty slow at capturing hot stuff), and you can be inspired by that. But my process is much simpler than that. When I try to determine what to write about, I look at the Search Console data across a wide range of keywords: not ten arbitrary keywords, or even a hundred, but all twenty thousand keywords, if that many exist, and… Do you use the Search Console API yourself?

Razvan: Mmm, via API?

Dan: Yeah.

Razvan: No.

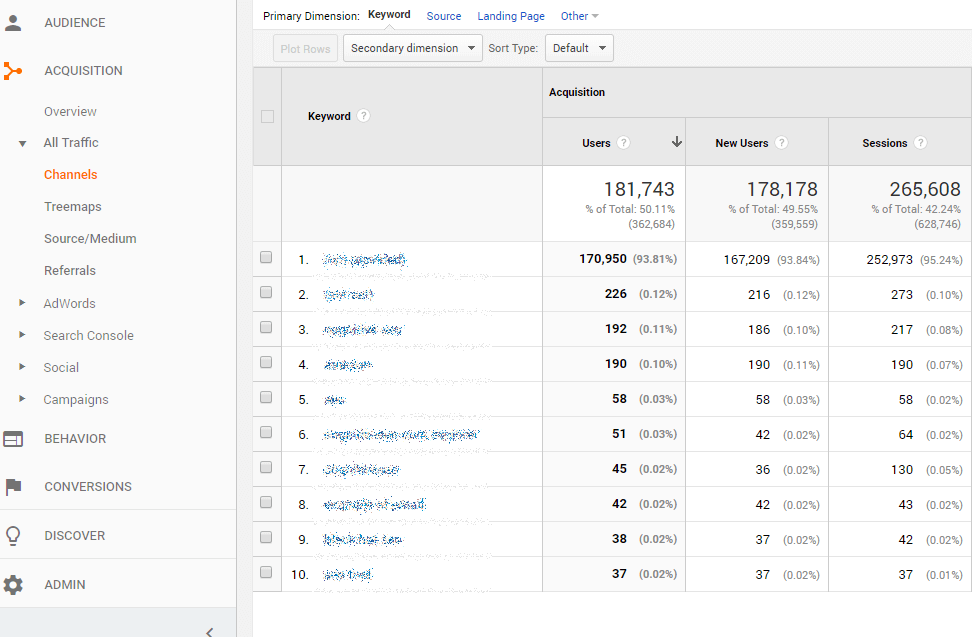

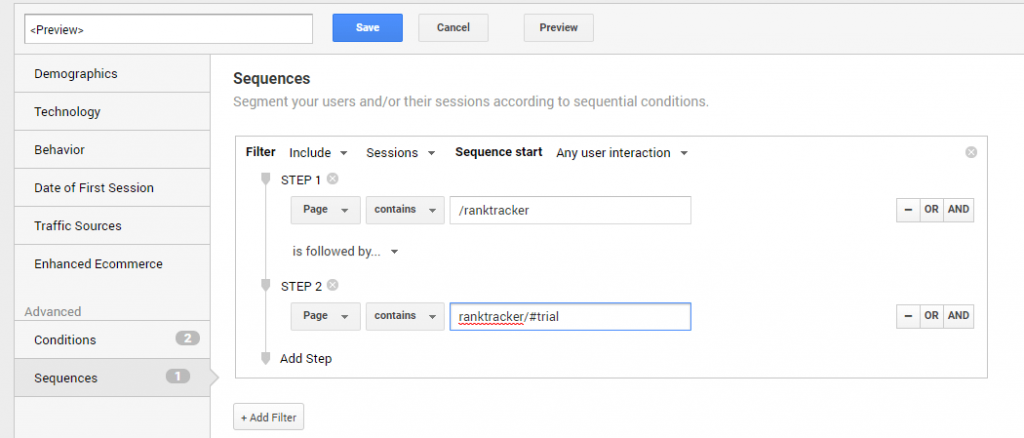

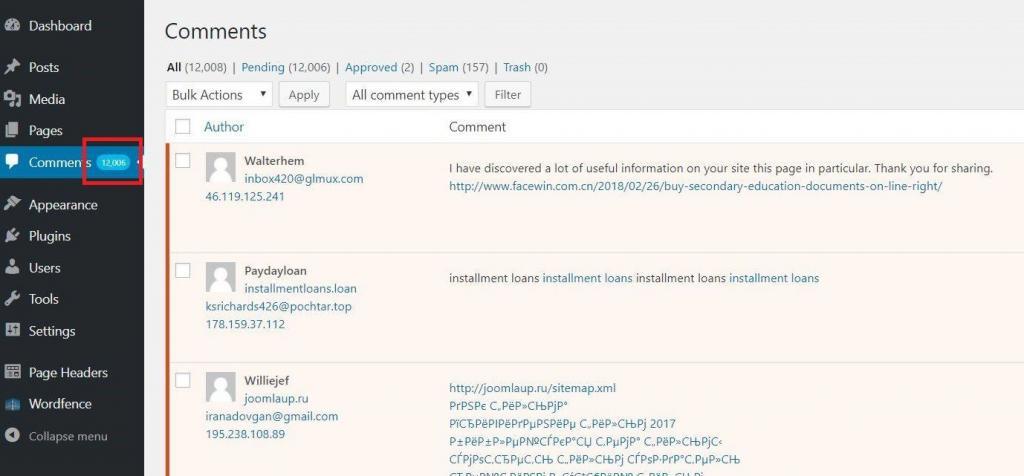

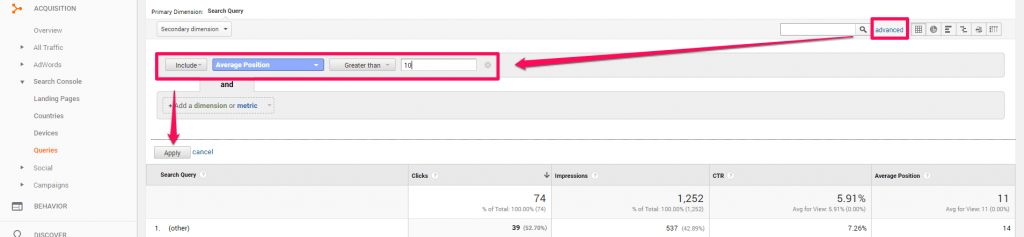

Dan: Right. I have a tool that makes API calls to Search Console, but when I’m doing manual work I primarily just use CSV exports. The problem with Search Console is that the CSV export is limited to a thousand rows, so to get all the keywords out of Search Console you have to do a little bit of a hack. What you will notice is that if you filter out your brand, for example, with a minus (-), you still get a thousand keywords in the export. If you minus the letter A, you still get a thousand keywords in the export.

Razvan: And you mix everything in the end.

Dan: You could do it for each letter of the alphabet: minus A, minus B, minus C, and so on. In the end, just run a merge command in DOS to combine the exports into a single CSV file, deduplicate the whole file, and voila! You’ve got the full range of queries for that particular domain.
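The merge-and-deduplicate step Dan does with a DOS merge command can also be sketched in Python. This is a minimal sketch, not Dan’s tool: it assumes each “minus a letter” export was saved as a separate CSV with a header row and a query column named `Query` (that column name is an assumption; check the header of your own export), and it keeps the first row it sees for each query. The `exports/` folder and `all_queries.csv` file names are hypothetical.

```python
import csv
import glob
from typing import Iterable


def merge_exports(
    exports: Iterable[Iterable[str]], query_column: str = "Query"
) -> list[dict[str, str]]:
    """Merge several Search Console CSV exports, keeping one row per query.

    Each export is any iterable of CSV lines (an open file works). The
    column name "Query" is an assumption; adjust it to your export's header.
    """
    seen: set[str] = set()
    merged: list[dict[str, str]] = []
    for export in exports:
        for row in csv.DictReader(export):
            key = row[query_column].strip().lower()
            if key not in seen:  # drop queries already seen in an earlier export
                seen.add(key)
                merged.append(row)
    return merged


def write_merged(rows: list[dict[str, str]], out_path: str) -> None:
    """Write the deduplicated rows to a single CSV file."""
    if not rows:
        return
    with open(out_path, "w", newline="", encoding="utf-8") as out:
        writer = csv.DictWriter(out, fieldnames=list(rows[0].keys()))
        writer.writeheader()
        writer.writerows(rows)


if __name__ == "__main__":
    # Hypothetical layout: exports/minus_a.csv, exports/minus_b.csv, ...
    paths = sorted(glob.glob("exports/*.csv"))
    # utf-8-sig strips a byte-order mark if the export has one.
    files = [open(p, newline="", encoding="utf-8-sig") for p in paths]
    try:
        rows = merge_exports(files)
    finally:
        for f in files:
            f.close()
    write_merged(rows, "all_queries.csv")
    print(f"{len(rows)} unique queries from {len(paths)} exports")
```

Deduplicating on the lower-cased query text is a design choice: the “minus” exports overlap heavily, so most rows in the later files are repeats of queries already collected.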

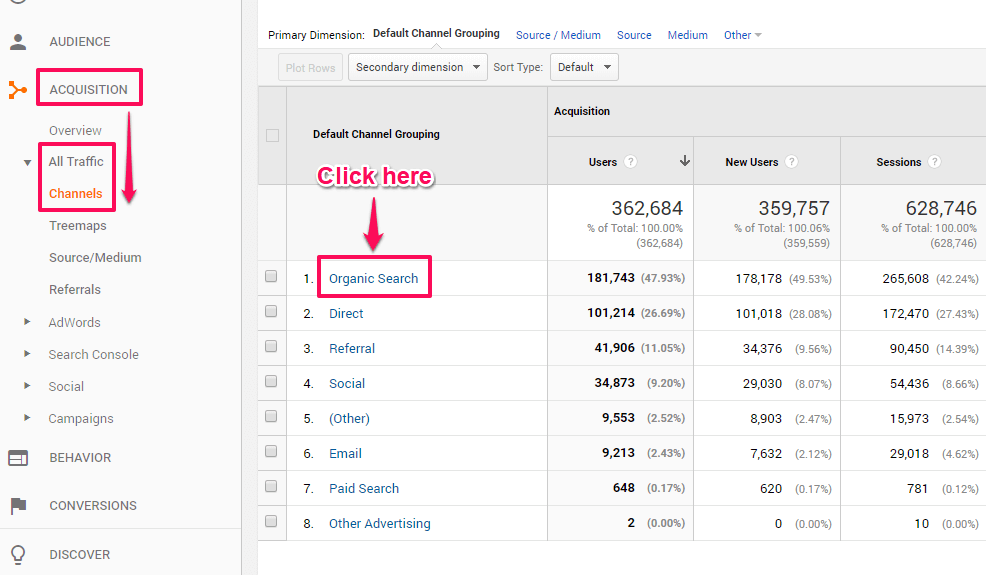

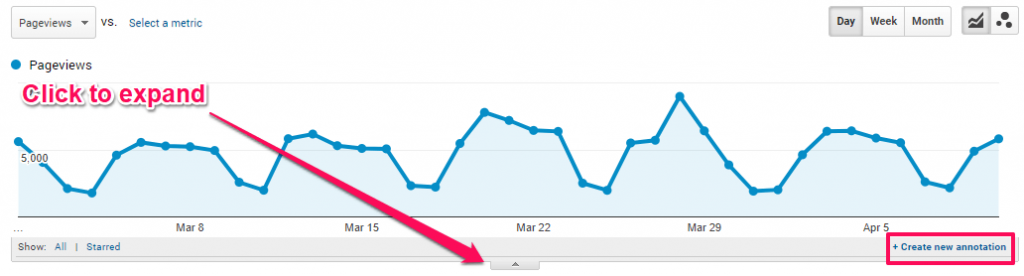

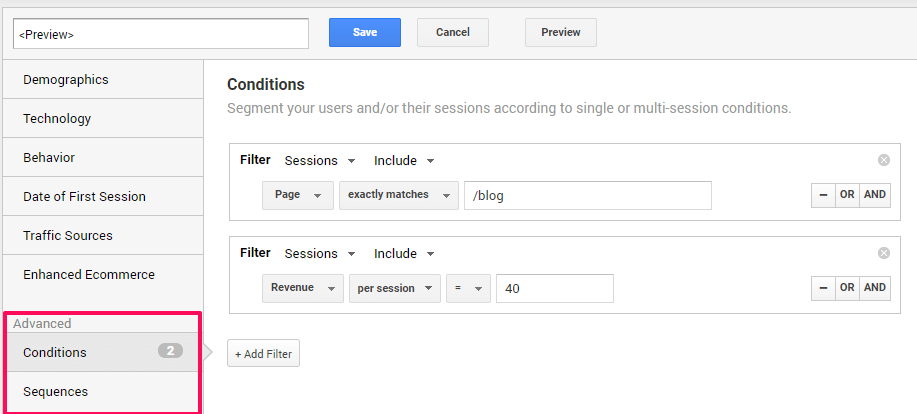

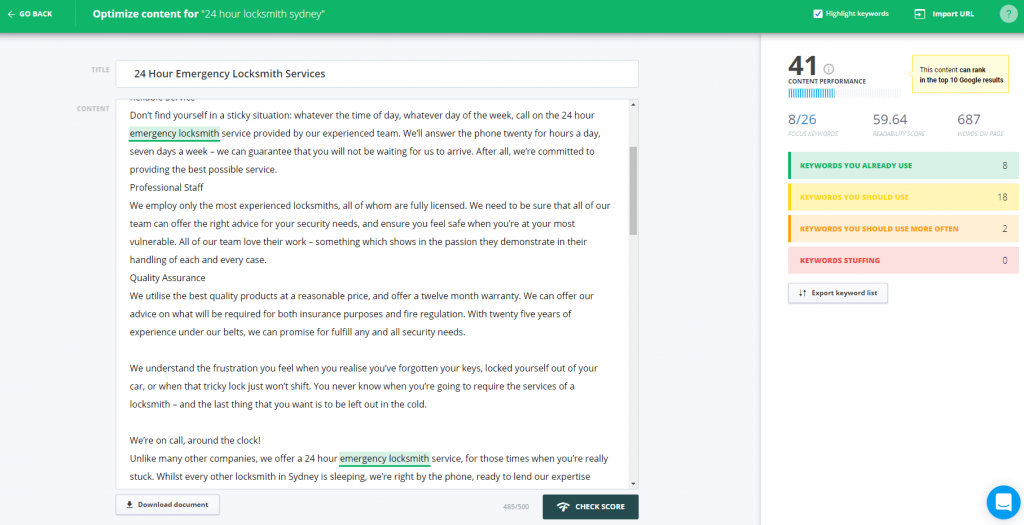

Now, little hack aside, you look at those keywords and it’s a very long spreadsheet that doesn’t mean anything by itself; you’re just looking at keywords, rankings, CTR, and so forth. So, for me, the next step is understanding what has potential, and I do that in two ways. First, I try to understand whether something could get more clicks if it moves up in rank, and I like to calculate how many more clicks I would get if it does. One keyword will get a hundred extra clicks if it moves up one position; another will get five hundred extra clicks for the same move. They’re all different, and then there’s also the difficulty of each particular keyword to consider, as well as grouping those keywords and mapping them to different URLs: one distinct URL could be triggered by 12 different keywords or queries in Google. So I like to understand the cumulative traffic for every query that leads to a particular URL and what a movement in rank would mean for that traffic, and that’s really easy to calculate. You need to understand your CTR distribution curve (say 30% for position one, 20% for position two, and so forth), based not on some arbitrary industry standard but on the data of your own website. Exclude branded terms, because they will have an 80% click-through rate and such, so they shouldn’t be factored in. You just look, statistically, at what the click-through rate is for something in position 3, and so you can calculate what your clicks would be in position 2. So that’s a really, really simple type of work: understanding how pages will benefit from movement in the rankings. Suddenly you understand which keywords and which pages to focus on.
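The calculation Dan describes, a site-specific CTR curve plus the extra clicks each URL would get from moving up, can be sketched in a few lines. This is a minimal sketch under stated assumptions, not Dan’s tool: it assumes query-and-page rows (the Search Console API can return both dimensions together), a hypothetical `QueryRow` structure, brand detection by simple substring match, average positions rounded to whole positions, and impressions that stay constant when a page moves up.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class QueryRow:
    query: str
    url: str          # landing page the query leads to
    impressions: int
    clicks: int
    position: float   # average position as reported by Search Console


def bucket(position: float) -> int:
    """Round an average position to the nearest whole position (half rounds up)."""
    return int(position + 0.5)


def ctr_curve(rows: list[QueryRow], brand_terms: tuple[str, ...]) -> dict[int, float]:
    """Site-specific CTR per position, built from non-branded queries only."""
    clicks: dict[int, int] = defaultdict(int)
    impressions: dict[int, int] = defaultdict(int)
    for r in rows:
        if any(term in r.query.lower() for term in brand_terms):
            continue  # branded queries have inflated CTR, so leave them out
        pos = bucket(r.position)
        clicks[pos] += r.clicks
        impressions[pos] += r.impressions
    return {pos: clicks[pos] / impressions[pos] for pos in impressions if impressions[pos] > 0}


def uplift_per_url(
    rows: list[QueryRow], curve: dict[int, float], positions_gained: int = 1
) -> dict[str, float]:
    """Extra clicks per URL if every query for it moved up `positions_gained` places.

    Impressions are assumed to stay constant; only the CTR changes.
    """
    uplift: dict[str, float] = defaultdict(float)
    for r in rows:
        current = bucket(r.position)
        target = max(1, current - positions_gained)
        if current not in curve or target not in curve:
            continue  # no site data for one of the positions; skip rather than guess
        uplift[r.url] += r.impressions * (curve[target] - curve[current])
    # Largest cumulative opportunity first.
    return dict(sorted(uplift.items(), key=lambda item: item[1], reverse=True))
```

With Dan’s example curve (30% at position one, 20% at position two), a query with 10,000 impressions at position two would gain roughly 1,000 clicks by moving up one place; summing those gains per URL gives the cumulative view of which pages to prioritize.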

And most people make the mistake of writing more blog content while completely disregarding their commercial landing pages and the quality of the content on their product or service pages. I think we, as an industry, need to focus more on that and less on pumping out more blog stuff, because links go towards high-quality stuff. Another exciting thing, in terms of not just content but general optimization opportunities, is understanding when something has a very bad CTR. Let’s say you’re in position 1 but your CTR is about 8%. You would want to investigate that. If that query has a hundred thousand impressions and you’re only getting about 8,000 clicks on it, that’s really, really low, and you would want to investigate straight away, because you’re losing traffic on it. So content, in this case, is not the content of the page itself; it’s the content of your title and your meta description, which I think need a rigorous, ongoing testing process until the website owner is completely satisfied that they’ve found the optimal title and description for the perfect snippet, followed by experimentation with schema and other mechanisms. One that’s popped up recently and, I think, isn’t utilized as much as it could be is the featured snippet, the zeroth result.
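Spotting the “position 1, 8% CTR” case is a comparison between a query’s actual CTR and what the site’s own curve predicts for its position. A minimal sketch, assuming rows of (query, impressions, clicks, average position) and an expected-CTR table built from your own non-branded data; the 0.5 tolerance (flag anything below half the expected CTR) is an arbitrary, hypothetical threshold, and the 30% figure is the illustrative curve value from the interview, not measured data.

```python
def flag_low_ctr(
    rows: list[tuple[str, int, int, float]],
    expected_ctr: dict[int, float],
    tolerance: float = 0.5,
) -> list[tuple[str, float]]:
    """Return (query, estimated lost clicks) for underperforming queries, worst first.

    A query is flagged when its CTR is below `tolerance` times the expected CTR
    for its rounded average position.
    """
    flagged: list[tuple[str, float]] = []
    for query, impressions, clicks, position in rows:
        pos = int(position + 0.5)  # round half up to a whole position
        if impressions == 0 or pos not in expected_ctr:
            continue
        expected = expected_ctr[pos]
        if clicks / impressions < tolerance * expected:
            # Clicks the expected CTR would have delivered, minus what we actually got.
            lost = impressions * expected - clicks
            flagged.append((query, lost))
    return sorted(flagged, key=lambda item: item[1], reverse=True)


# The example from the interview: position 1, 100,000 impressions, 8,000 clicks (8% CTR),
# checked against an assumed 30% expected CTR for position 1.
report = flag_low_ctr(
    [("cruises to new zealand", 100_000, 8_000, 1.0), ("nz cruise deals", 1_000, 280, 1.2)],
    {1: 0.30, 2: 0.20},
)
print(report)
```

At an expected 30% CTR, that single query would be leaving roughly 22,000 clicks on the table, which is why it would go to the top of the list for title and description testing.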

Razvan: Did you try A/B testing titles for pages, and how did you do it without affecting rankings negatively in the long term?

Dan: Absolutely. The solution is simple: you keep your core keywords (we’ve done the keyword research, we’ve looked at the Search Console data, we know which keywords need to be there), and we play with all the other factors. There are elements of a title tag that will increase the click-through rate without affecting the ranking of the primary keyword. One example is adding a price to the title. Take a service like cruises, “cruises to New Zealand”: that’s the title, and the CTR is 10%. Then we change it to “cruises to New Zealand from $1600”, and suddenly we see a 12% click-through rate because we added the price. It could be the other way around: we could have the price in there and the CTR drops because people think “Ah, too expensive, I’m not going to click on it”. It doesn’t matter.

Razvan: It’s tricky to test, because when you change it from “cruises to New Zealand” to a title that also has the price, for example, your rankings might also change, and then you cannot measure the CTR correctly.

Dan: You only need to measure the CTR relative to the average ranking position. An absolute position doesn’t exist, we know that. Whether somebody searches from a mobile phone or a tablet, logged in or logged out, from Sydney, Melbourne, or Brisbane, they get a completely different result.

Razvan: Yeah, correct, but you can lose positions, dropping from an average of 1.5 to an average of 2 or 2.5, for example, and then you cannot compare the CTR with what you had before, because it doesn’t make sense. You’re in position 2 on average, so your CTR is normally lower.

Dan: But you still can. Let’s say your keyword ranks in position 5 with a 10% click-through rate. You change the title tag; the CTR goes up, but the rank drops to position 6. You’d expect an 8% click-through rate in position 6, but you’re getting 10%: that’s 2 percentage points above expectation. So you can always notice a deviation from the norm. Regardless of what position the keyword or page is in for a particular query, you can always understand how the adjustment to the title has affected its performance at that position; even if the rank drops, you can tell whether the CTR has increased. Of course, that doesn’t help with the fact that you’ve dropped a position, but from what I’ve been doing, I have rarely seen a ranking drop caused by a title adjustment.
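The point here is that a title test should be judged against the CTR the site normally gets at the page’s new position, not against its old CTR. A minimal sketch of that comparison, assuming you already have a site-specific expected-CTR table keyed by whole position; linear interpolation for fractional average positions (such as 5.5) is an added assumption, not something Dan specifies, and the function raises `KeyError` if the table lacks a neighbouring position.

```python
import math


def expected_ctr_at(position: float, curve: dict[int, float]) -> float:
    """Expected CTR for a (possibly fractional) average position.

    Linearly interpolates between the two neighbouring whole positions.
    """
    lower, upper = math.floor(position), math.ceil(position)
    if lower == upper:
        return curve[lower]
    weight = position - lower
    return curve[lower] * (1 - weight) + curve[upper] * weight


def ctr_deviation_points(
    clicks: int, impressions: int, position: float, curve: dict[int, float]
) -> float:
    """Observed CTR minus expected CTR at the same position, in percentage points."""
    observed = clicks / impressions
    return 100 * (observed - expected_ctr_at(position, curve))


# The example from the interview: after the title change the page sits at
# position 6, where the site's curve expects 8%, but it still gets 10%.
curve = {5: 0.10, 6: 0.08}
print(round(ctr_deviation_points(100, 1_000, 6.0, curve), 2))  # prints 2.0
```

A positive deviation means the new title is outperforming what the position alone would predict, even though the rank dropped.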

Razvan: Obviously, when you change it, you change it with the intent of increasing both the CTR and the ranking. Both might happen, or neither.

Dan: That’s with titles. With meta descriptions, you can literally do whatever you want and it has absolutely no impact on rankings. But the title is what people pay attention to first, that’s a given. So price is number one, rich snippets are another, and the presence of a well-known brand is a third. Sometimes websites think “Well, my brand is in the URL, why do I need to insert my brand as a variable in all my titles?”. But for big brands like Expedia or Nike, putting the brand in the title actually adds to the user’s confidence and trust in that particular search snippet, so it can be beneficial. For a lesser-known brand, removing your own brand could increase the click-through rate.

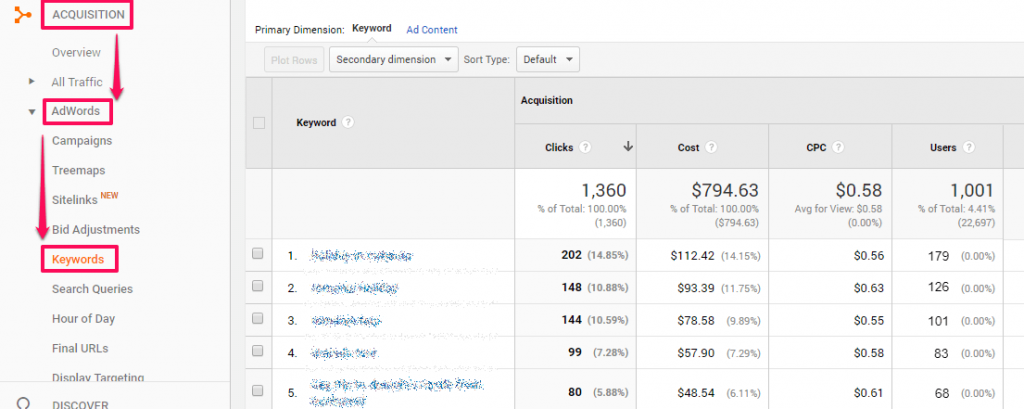

Razvan: Did you also try using AdWords for A/B testing, for example? It may be easier to test with AdWords, see exactly what the perfect title and description are for a page on a specific keyword, and then apply that to the actual ranking page.

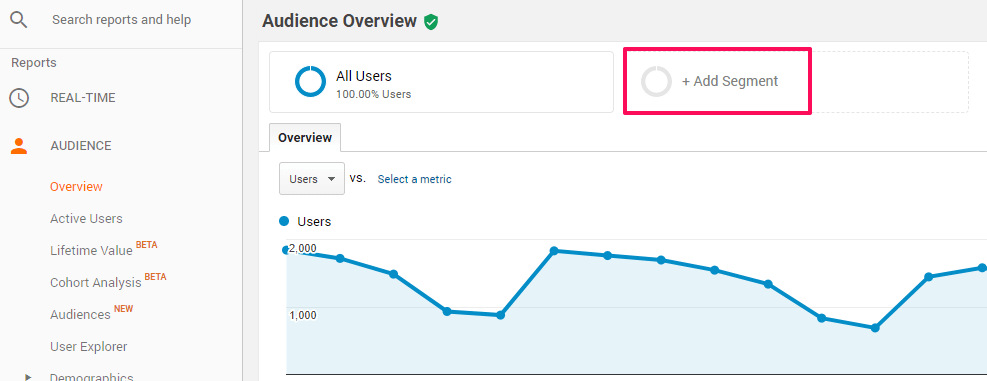

Dan: Yeah, that’s what’s going on at the moment. We’ve got a client running a very elaborate and quite expensive AdWords campaign with a lot of keyword volume. They’re in the educational space, an .edu domain, and we’re currently liaising with their PPC department, obtaining all the data and trying to understand not just instances of high-performing CTRs but the rules: what, in general, increases the CTR? The next step will be: if we have a hypothesis, we quickly test it with AdWords, and once it proves successful, we test it again in organic. The problem is that behavior in organic and in AdWords is slightly different; not dramatically, but it is different. So AdWords is good for quick hypothesis testing before rolling things out to organic, but the testing must continue in the organic results as well. I don’t have a fancy testing framework at the moment; it’s just a Google spreadsheet, monitoring the impact of different experiments on the CTR. So we combine rank increases with CTR optimization to get more traffic to the pages that need it, in order of priority, obviously. So where does content come into this? Well, it goes to the pages you’ve highlighted as having the highest potential to grow and bring you clicks. Of course, there’s a third layer to the whole research: applying the average conversion rate and the average or goal value, for that particular page or in general, so you can attach a monetary reward and understand how much more revenue the business will generate in different scenarios: optimizing the CTR to where it should be as per the average, increasing the rank, or both. I think that’s a nice piece of information to show to your management.
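The third layer, attaching money to each scenario, comes down to clicks × conversion rate × goal value. A minimal sketch, assuming impressions stay the same in every scenario and that a rank increase preserves the page’s CTR relative to the site average; the `PageForecast` fields and scenario names are hypothetical, and the example numbers are illustrative, not client data.

```python
from dataclasses import dataclass


@dataclass
class PageForecast:
    impressions: int          # assumed to stay constant across scenarios
    current_ctr: float
    expected_ctr_now: float   # site-average CTR at the page's current position
    expected_ctr_up: float    # site-average CTR at the improved position
    conversion_rate: float
    goal_value: float         # revenue (or goal value) per conversion


def revenue(clicks: float, page: PageForecast) -> float:
    """Estimated revenue from a number of clicks on this page."""
    return clicks * page.conversion_rate * page.goal_value


def scenarios(page: PageForecast) -> dict[str, float]:
    """Estimated revenue for the current state and three improvement scenarios."""
    # CTR optimization only: bring the CTR up to the site average for this position.
    ctr_fixed = max(page.current_ctr, page.expected_ctr_now)
    # Rank increase only: keep the page's relative over/under-performance.
    ctr_ranked = page.current_ctr * page.expected_ctr_up / page.expected_ctr_now
    # Both: at least the site average at the improved position.
    ctr_both = max(ctr_ranked, page.expected_ctr_up)
    return {
        "current": revenue(page.impressions * page.current_ctr, page),
        "optimize_ctr": revenue(page.impressions * ctr_fixed, page),
        "increase_rank": revenue(page.impressions * ctr_ranked, page),
        "both": revenue(page.impressions * ctr_both, page),
    }


# Hypothetical page: 10,000 impressions, 5% CTR where the site average is 10%,
# 20% expected one position higher, 2% conversion rate, $100 per conversion.
page = PageForecast(10_000, 0.05, 0.10, 0.20, 0.02, 100.0)
for name, value in scenarios(page).items():
    print(f"{name}: ${value:,.0f}")
```

Keeping the scenarios side by side is what makes the comparison useful for management: in this made-up case, fixing the CTR alone is worth as much as a rank gain, and doing both roughly quadruples the page’s revenue.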

Razvan: Do you think CTR is a ranking signal? I think you wrote, or mentioned, an article in the past about how user behavior is a ranking signal and how click-through rate is actually a ranking factor. Do you still believe this today, or has your opinion changed?

Dan: It’s not a matter of belief; it’s a well-known fact, and I think Google’s been quite sneaky in the way they talk about CTR. There is absolutely no shadow of a doubt that CTR is a ranking signal. And CTR is not only a ranking signal, it’s essential to Google’s self-analytics, I suppose. It’s one of the core metrics that Google and other search engines use to improve the quality of their search results; this is not limited to Google. Microsoft (Bing) and Google, and Yahoo as well, did joint research to understand how position bias impacts click-through rates, and how bolding the keywords that match the query in the snippet impacts user selection and the CTR. As a result of one of those papers, and of patents at a later date, Google removed the bolding of search terms matching the user’s query from the title in the snippet.

You may remember Google from maybe five to seven years ago, when the keyword was bolded in the title. Two years after that paper came out showing bias in users’ selection based on bolding in the title, they actually ended up removing it. I think the article I published on Moz was called “User behavior data as a ranking signal”, and those who are curious can google it and find it; it’s loaded with proof that Google not only uses CTR but really relies on it as one of its essential quality signals.

Now, one thing to understand is that Google also owns Chrome and gets a variety of data from Chrome as well. If you’re not convinced, simply type “chrome://histograms” into your address bar and you will open a file that’s been recording everything you’ve ever done in Chrome, including opening tabs, closing tabs, bookmarking, copying a URL, starting to type a URL, completing the URL, hitting Enter, swiping text, tapping, scrolling, everything! It’s a large text file that gets compressed and, if you’ve ticked the box, sent to Google for quality purposes. So user behavior data is essential to Google’s self-improvement. Where people get it wrong is thinking they can go to some website, inflate the click-through rate for a particular page, and suddenly expect higher rankings for that website. This is not a real-time signal; Google simply uses it to improve its systems, not to manipulate the authority of a website in real time, the way a link graph would.

Razvan: You know, I’ve noticed in the past, on some blackhat boards, negative SEO done by clicking URLs on a specific results page. Let me give you an example: they ran some ads, mini-jobs where people were paid a few cents to click on result pages for a given keyword, and it sounded like this: “Search for this keyword, then click on any of the results there except this specific page.” That page was targeted to be pushed out of the top 10. “When you click on the result you’ve chosen, scroll and navigate inside the page.” So, practically, they were doing negative SEO to a particular page; that page didn’t get any clicks, and by doing this consistently they were practically able to manipulate the rankings and drop the page, or the set of pages, out of the top 10.

What do you think about this? Have you seen this, do you have more information that you want to share with us about this technique?

Dan: I’m skeptical, simply because black hats are not really known as scientific types. They don’t document their testing process, and they want the results to be true, so they find them true.

Razvan: Yeah, they were paying for the stuff to be … and I can tell you that I saw these kinds of announcements on blackhat boards for a variety of keywords, both for negative SEO and for the opposite. There were ads like “Search for this, go to this particular page, click inside the page, start navigating, and that’s it, your job is done”, and practically they were improving the rankings for a particular page by doing the same thing. You got your two or five cents, whatever they were paying, in order to increase the CTR and also show Google that there was activity in that particular browsing session. And this was done continuously for months, depending on the budgets of the people trying to manipulate the results.

Dan: So here’s the thing. For the last two years, I haven’t been as prolific with my writing and sharing; I’ve been busy. But I haven’t stopped experimenting, and one of my experiments used Mechanical Turk, and I’m talking large numbers of Mechanical Turk users, in an attempt to manipulate the results through user selection. I ran the tests persistently and continuously with a large number of users, for different pages and different queries, and I haven’t seen any impact. Now, I was using Mechanical Turk, so that’s perhaps the weakness of this experiment: it was cheap to run, but there may have been known IP addresses. For what it’s worth, though, a large group of users participated in the experiment, and I haven’t seen anything interesting other than the data showing up in Search Console. The only thing I actually manipulated was the queries in Search Console and the click-through rate for that particular test query. So it worked, Google bought it, the query peaked, and I can see it in there, but it has done absolutely nothing, not only in terms of rankings but also in terms of Google Suggest or the related queries at the bottom of the search results, which was part of my test as well. I was trying to manipulate Google Suggest for certain queries, and unfortunately it didn’t work.

Razvan: Many people have claimed success in manipulating Google Suggest in the past. I don’t recall the exact suggestion they manipulated, but it was newsworthy; sites wrote about the manipulation. I think something was also done in Romania by someone, and it was acknowledged that those results shouldn’t be there and that the suggestion didn’t make sense. But that was a couple of years ago, so Google’s algorithms are surely better now.

Dan: Well yeah, I’m sure they’re improving, because they’ve got a whole team in charge of Google Suggest. But I know suggestions can be manipulated; it just takes really large volumes of people to trigger it.

Razvan: It probably depends on what you’re trying to manipulate. If the queries have low volume, then Google should relate to that lower volume… Trying to manipulate a keyword like “CNN” is one thing; with a keyword that has a couple of hundred searches per month, maybe you have better chances. But let’s talk about something that works for you now.

What SEO technique is working for you right now? Share some of the technicalities, if you can.

Dan: Yeah. Internally, within the company, I’m generally criticized for oversharing, and we have one particular method that’s working rather well, but my guys have asked me to embargo it so we can milk the benefits for a year or two. Still, I won’t keep it a complete secret.

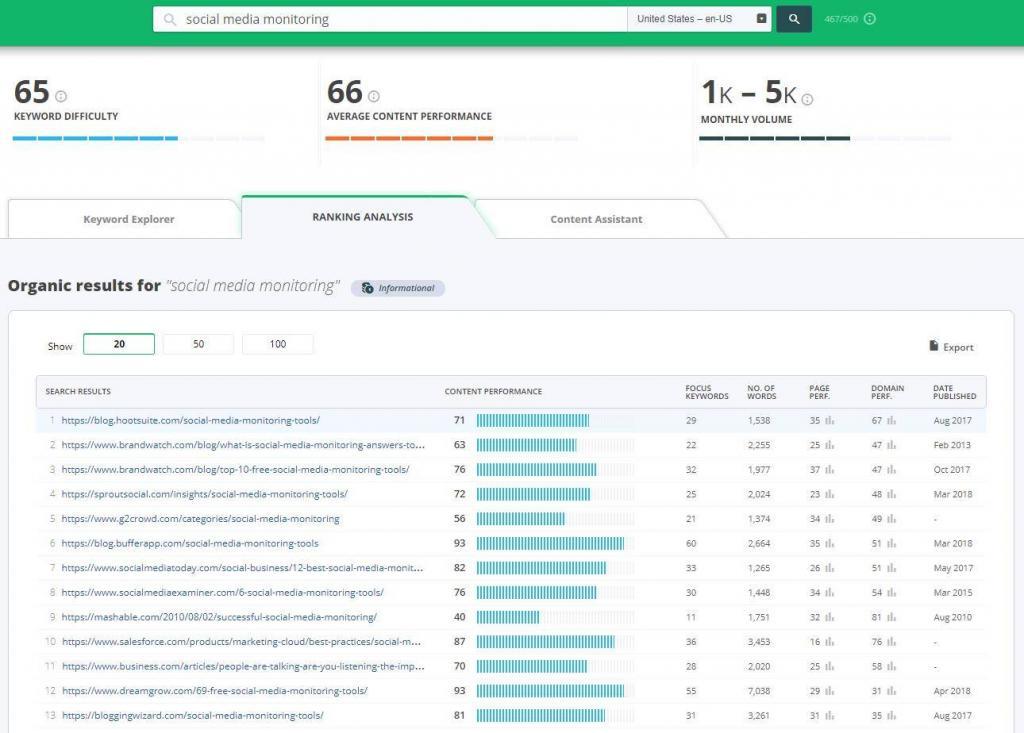

I think, in essence, it’s a problem we’re consistently finding with all our corporate clients. These are typically large websites with very successful blogs and high domain authority, and what’s happening is that all their link equity is coming from blogs, news sites, and whatnot to their blog content, which is great: high-quality pages. But if you look at their money pages, there are zero links, because those pages are just boring. There’s absolutely nothing of value on them. If you’re selling cars, say you’re the Toyota website and that’s your latest model, you’ve got your specifications and this and that, but other than that there’s nothing on the page. I think the worst case I’ve seen was a website that was selling car seat covers. How boring is that, you know? It’s a seat cover, you wrap it around your seat and that’s that. So currently we’re busy working on our clients’ money pages, improving their content and linkability.

This is not a new thing for us; it works really well, and we’ve made attempts in the past. One of the methods was to run a competition not on a separate competition page but on the page of the product you’re giving away. Let’s say you’re selling glasses: this is the model, and you’re giving away ten pairs to lucky winners over the next month. Don’t create a separate competition page; run the competition on the page of the glasses you’re giving away. It could be in a separate tab, an accordion, or whatever mechanism; just structure it so it doesn’t detract from the purchase, and if people land on it via a URL ending in #competition, the hash takes them straight to the competition rules. Then it’s time to announce the winners. What do you do? Do you send people to a winners’ page? No, you don’t. You show the winners on the same page again, the page where that pair of glasses can be purchased.

So you see how we’re reusing the main product page. Improving its content is what I’m doing now; the old method was to create publicity and interest for the landing page itself. That’s one nice method that works, and people are just ignoring it. Basically, we try to generate links, but getting links to commercial landing pages is really hard. So that’s the problem we’re solving at the moment: we’re working on raising the quality of the content, using data, statistics, new research, really useful stuff, tools, tables, and downloadables, until the product page or service page reaches the pinnacle of usefulness. At that level you can actually do outreach for the page, and a blogger might actually link to it, because there’s something on it of such high value that they find it very useful.

The problem I’ve run into when working on the highest-quality landing pages is that they tend to be quite big, with a lot going on, and that tends to detract from the purchasing part. So we have a problem: we’re trying to make the landing page itself the linkable asset, a role that would otherwise go to a blog post, a completely irrelevant page we don’t need links to. On the other hand, we want our conversion rate to be very high, and if you have too many distracting elements, your conversion rate will drop; every element you add is one more point against your conversion. So, as I mentioned earlier, using tabs, accordions, and other devices to structure the layout is helpful in this context, except that Google’s desktop index is still being funny about tabs, accordions, and such devices: anything contained within a tab or accordion will be indexed but won’t rank. I actually did this experiment: I published a post on Moz, then copied that post to my own website. Moz is high-authority and mine is lower-authority, but I did one thing: I expanded all the hidden content on my version, while Moz’s blog post had little clickable elements to expand the bits and pieces. Guess who ranked first for the expandable pieces? Me, with essentially duplicate content. So what’s happening right now is that when landing pages for very important products put information behind a tab, an accordion, or some other hiding element, that content will not rank at all. In fact, dirty scrapers with low domain authority will outrank it if they display that content by default. So that’s something to keep in mind.

Mobile? Not a problem. Gary Illyes and John Mueller have said that Google doesn’t have a problem with content hidden behind such elements on mobile devices, because that’s good user experience. Google uses accordions itself in the search results for related questions, and I cannot fathom the hypocrisy of it: they’re punishing webmasters for using such elements by not ranking that content, yet they employ that same piece of user experience themselves. So on mobile-first it’s fine; on desktop, not so fine. That leaves you with the tension between conversion optimization and content richness, and that’s something we’re currently working on.

What do you think is the top mistake SEO people are making nowadays?

Dan: Writing useless content on the blog. More blog posts! Let’s build some links for that stuff, yeah. I think another one is chasing links while ignoring the technical SEO basics, like what we discussed earlier: optimizing your click-through rate and improving your titles and descriptions. That’s one of the most basic SEO things, and people completely ignore it.

How hard do you think SEO is now, compared to three years ago?

Dan: Three years ago – not so different, but 5-6 years ago, yeah, quite different.

How much? Twice or…?

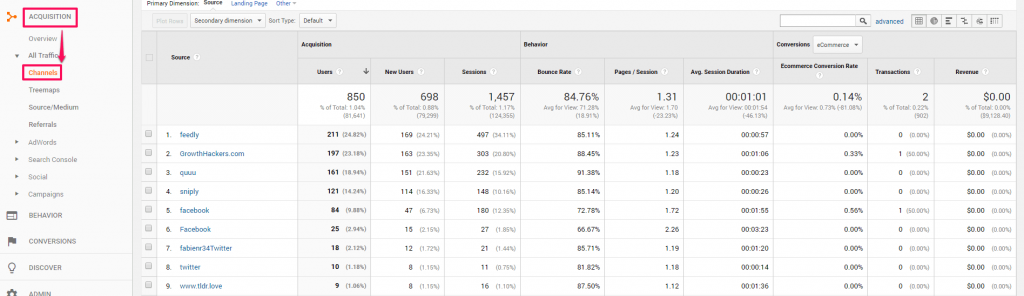

Dan: I think 50% of it has completely changed, and there’s no longer a choice: an SEO has to become a digital marketer. Internally, our team has completely blended the social ads and SEO processes, to the point where the ladies in our accounts department ask us, “Is this invoice for paid or organic?” and we say, “What’s the difference?” So when we form a digital strategy, our ads might serve to increase awareness of a piece of content that could lead to links. Internally, we call it “micro-targeting”. I know it’s a bit of bad timing for this topic, but effectively we have been using Facebook to micro-target specific users, to influence their opinion and try to get them to write about our clients: people at certain newspapers, in government or other areas, bloggers…

Did you also try LinkedIn? Its professional network would let you target these kinds of people in the same way.

Dan: I have tried it: too expensive. It has extraordinary targeting. I love what LinkedIn has done with targeting, and it arguably works better than Facebook for certain industries; obviously not for reaching your average moms and dads, but for the professional world and services the targeting is very good. I was surprised by Twitter. Twitter has served a great purpose for my SEO projects. Imagine getting links to your clients’ content without doing manual link outreach. How nice is that? I’m not saying I can get that every time or in large volumes, but if you structure an advertising campaign the right way to send bloggers and journalists to the content, and if the content and the topic are good enough, it will be picked up. So, effectively, you’re buying impressions, you’re buying clicks, you’re paying for that part, and it’s up to the journalist or blogger to link to that piece of content if they find it link-worthy enough.

That’s where content quality comes into play. If your content piece is 5,000 words of boring stuff that doesn’t get to the point, it’s hard to read, it’s a wall of text and you’ve blended everything together, they’re just going to leave. But suppose you’ve got something newsworthy, useful, really valuable and really easy to digest, and you’ve sent the blogger or journalist to that page at the right time, not too late or too early in the day, just as they’re about to write about something like that, and they think, “Oh, that’s really useful!” That’s hard to pull off, but it’s even harder if you’re doing manual link begging: “Would you please…?”, “Would you mind…?” That’s really nasty work; nobody likes doing it. So I guess I’m trying to pioneer and perfect a technique of micro-targeting influencers through advertising, in an attempt to give high-quality content the exposure it needs to be picked up organically.
So I’m paying money, getting links, but not breaching Google’s guidelines.

When people are choosing an SEO agency to work with, what do you think they should pay attention to, and what should they be wary of in what the agency tells them?

Dan: Avoid smoke and mirrors, avoid vague language. If you don’t understand what an SEO is saying to you, if they can’t explain it to you as if you were a five-year-old (I’m reminded of AJ Kohn’s “Blind Five Year Old”; that’s the whole point), don’t sign up. When an SEO agency explains its strategy or approach to you, it has to make perfect sense. Their communication during that initial proposal process has to be crystal clear; it has to make business sense and pass the basic logic test, the reasonable-man test. If it’s all too vague, if they’re just using jargon, if their excuses for poor campaign performance are things like “our algorithms” and this and that, and they’re trying to cram too many things in there, stay away. A good SEO agency will explain everything in black and white: what’s possible and what’s not, where the opportunities are and where they aren’t. Recently, I have explained to most of my new clients that everything we do will be a test. We’ll try this: we’ll put 5,000 dollars into this idea and we’ll let you know whether it works. That’s the best an SEO can do. We do not control Google, we do not know what’s going to happen; we are the weatherman. We can see what’s going on in the landscape, we can see it might rain, but we cannot make it rain. And that’s the big difference. An SEO who promises rain is a crook.

Razvan: Yeah, unfortunately, a lot of SEOs did this in the past and probably still do, and yes, SEO got a bad name during this process of evolution.

Okay, what do you think is the next step for Google? What’s the next algorithm, the next area where they will focus on big changes? We remember Penguin, Panda… What do you think is coming next with a similarly big impact, if anything, in your opinion?

Dan: We understand that Google is, at this point, beyond what I would describe as petty human tweaks. New engineering ideas and new concepts might still be introduced into Google’s algorithms, but we’re way beyond the point where human input is the most relevant thing. We already know that Google lets the machine make up its own mind about whether a website is high-quality or low-quality, whether a piece of content is valuable or not, whether it’s authoritative or not. In the age of unsupervised machine learning, we have absolutely no control, and we’re going to see machines teaching machines and continually improving themselves. User behavior data will be valuable, traffic will be valuable, your external marketing tactics will be valuable, branding will be super important, and understanding when somebody is notable and significant will be important. So I think an SEO needs to look at all the other channels and employ a sound marketing strategy to persuade Google’s AI, essentially, that this domain or brand has significance and authority. There will be less and less room for the manipulative signals we’re so used to, including links. Links are still the backbone of how Google works, but they’ve handed the reins to machine learning, and that’s game over for little tricks and tweaks. I think we’re going to see Google’s engineering rely more and more on machine learning, and we’re going to see a lot more intelligence out of Google.

In fact, there’s an article I wrote, maybe in 2015, that predicts the entire timeline of Google going from a basic search engine to becoming our assistant, able to write its own content, give advice and even represent us in court, and yeah, it makes an interesting projection. I’ll try to dig up that link and share it with you so you can share it with the rest of your viewers. Since we’re in 2018 now, it would be quite interesting to see whether my predictions are still on the money so far: how accurate was I, or was I overly optimistic? We’re not at the Skynet level yet, but we’re getting there.

What I would love to see is one proper competitor to Google, whether it be Amazon, eBay, a new search engine or even Facebook; I’d love to see a proper competitor in terms of information retrieval and presentation to users. Maybe it comes out of China or Russia, I don’t know, but I would really love for it to exist soon; I’m waiting for that nice surprise. Let’s make a prediction: in the next five years, somebody will come onto the world stage and completely surprise us with a disruptive search engine and artificial intelligence technology, perhaps based on quantum computing, with qubits instead of zeros and ones.

Razvan: Yeah, the problem is that Google is investing heavily in quantum computing.

Dan: I hope it’s not Google, I hope it’s… and I love the Google guys, they send me gifts. I think there needs to be good competition. Proper competition to Google is a good thing for Google and a good thing for users, so that they’re driven not just by their shareholders but by pure competition. Just like Russia and America need a new space race, we need the search engine race to start again.

Razvan: Thank you very much for answering all these questions for our audience.

Is there anything else you’d like to add, or a final message for our audience to end this interview?

Dan: You know what they say: “When you give a talk, tell them what you’re going to tell them, then tell them, and then tell them what you told them.” So let’s do that last part. Remember, when you are writing for the web: start with the basics, get to the point, then go into further detail, and leave all the fluff for the end. Split your ideas into multiple paragraphs. Direct your highest-quality content towards your landing pages, your commercial landing pages, based on the data; that data comes from Search Console, and all your opportunities and potential are there. Look at your CTRs, and look at your authority optimization opportunities based on traffic growth and CTR optimization. Keep testing, keep experimenting. You are not just an SEO, you’re a digital marketer, so employ every channel. That’s all I have to say.

Razvan: Okay, thank you very much, Dan!

Dan: It’s been an absolute pleasure! I look forward to joining you again some other time.

(All I see is dolla’ signs, dolla’ signs).